Mistral NeMo: New 12B AI Model with 128k Context Length

Mistral AI, in collaboration with NVIDIA, has unveiled Mistral NeMo, a 12-billion-parameter (12B) model with a context length of up to 128,000 tokens. The model is released under the Apache 2.0 license.

Mistral NeMo is designed as a drop-in replacement for systems that currently use Mistral's 7B model. It relies on a standard architecture, which keeps integration simple. Its reasoning, world knowledge, and coding accuracy are described as state of the art for its size category.

Performance and Comparisons

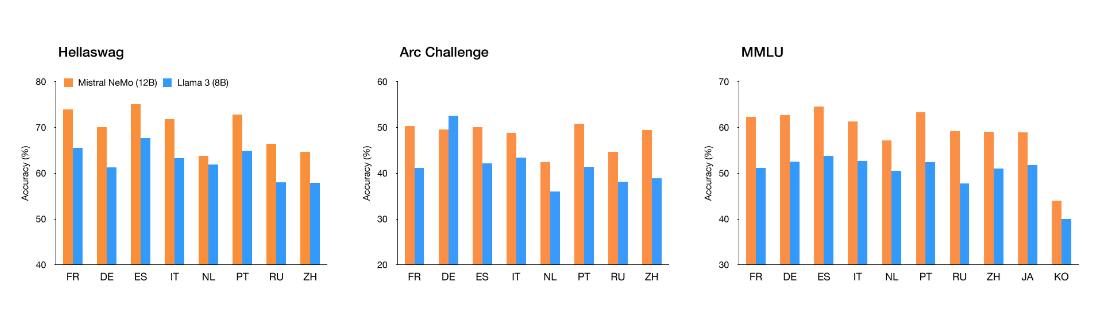

The model's performance is shown through a comparison with two other recent open-source pre-trained models, Gemma 2 9B and Llama 3 8B. Mistral NeMo is reported to outperform both, particularly on multilingual benchmarks.

Multilingual and Compression Capabilities

Mistral NeMo excels in multilingual applications, supporting languages such as English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi. It introduces a new tokenizer, Tekken, which significantly improves text and source code compression efficiency compared to previous models.

Tekken was trained on more than 100 languages. Compared to the SentencePiece tokenizer, it is approximately 30% more efficient at compressing source code, Chinese, Italian, French, German, Spanish, and Russian, and it is two times and three times more efficient at compressing Korean and Arabic, respectively. Because the same text is encoded in fewer tokens, more content fits within the context window, which makes Mistral NeMo well suited to diverse linguistic applications.
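
To make these ratios concrete, the short sketch below estimates how a token count might shrink under the reported gains. It rests on an assumption that the article does not spell out: "N times more efficient" is read as "the same text needs 1/N as many tokens" (so 30% more efficient is treated as a factor of 1.3). The 10,000-token baseline and the function name `tokens_after` are purely illustrative.

```python
# Rough estimate of how tokenizer compression changes token counts.
# ASSUMPTION: "N times more efficient" means the same text needs 1/N as many tokens.


def tokens_after(baseline_tokens: int, efficiency_gain: float) -> int:
    """Estimate the token count under a tokenizer that is `efficiency_gain`
    times as efficient as the baseline (1.3 means 30% more efficient)."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return round(baseline_tokens / efficiency_gain)


# Hypothetical text that costs 10,000 tokens with the older tokenizer.
BASELINE_TOKENS = 10_000

# Gains taken from the figures quoted in the article.
REPORTED_GAINS = {
    "source code and several European languages (about 30%)": 1.3,
    "Korean (2x)": 2.0,
    "Arabic (3x)": 3.0,
}

for label, gain in REPORTED_GAINS.items():
    estimate = tokens_after(BASELINE_TOKENS, gain)
    print(f"{label}: roughly {estimate} tokens instead of {BASELINE_TOKENS}")
```

Under this reading, a fixed 128,000-token context window would hold proportionally more Korean or Arabic text than it would with the older tokenizer. Real savings depend on the actual text being tokenized.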

Advanced Fine-Tuning and Alignment

Mistral NeMo underwent advanced fine-tuning and alignment phases. Compared to its predecessor, Mistral 7B, it is better at following precise instructions, reasoning, handling multi-turn conversations, and generating code.

Availability and Adoption

Pre-trained base and instruction-tuned checkpoints are available on Hugging Face, promoting widespread adoption among researchers and enterprises. The model is also available on la Plateforme under the name open-mistral-nemo-2407, and it is packaged as an NVIDIA NIM inference microservice.
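
As an illustration of how the hosted model might be called by name, here is a minimal sketch that builds a chat request using only the Python standard library. Several details are assumptions not stated in the article: the endpoint URL, the OpenAI-style request and response shape, and the `MISTRAL_API_KEY` environment variable name. Check the current la Plateforme documentation before relying on any of them.

```python
import json
import os
import urllib.request

# ASSUMPTION: chat completions endpoint for la Plateforme; verify against current docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
# Model name as given in the article.
MODEL_NAME = "open-mistral-nemo-2407"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat request for the model."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the reply text.

    ASSUMPTION: the response follows the choices[0].message.content layout,
    and the key is stored in a hypothetical MISTRAL_API_KEY variable.
    """
    request = build_chat_request(prompt, os.environ["MISTRAL_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key set in the environment, calling `ask("Summarize this paragraph in French.")` would send one request and return the reply. Keeping the request builder separate from the network call makes the payload easy to inspect without spending API credits.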

The Future of Small Language Models

Mistral NeMo is a further step in the trend toward powerful yet efficient small language models. The release continues Mistral's tradition of open-source contributions and positions the company as a strong competitor to industry giants.

For more information and to try Mistral NeMo, visit ai.nvidia.com.