Google DeepMind has unveiled research on robot navigation built on Gemini 1.5 Pro. By using the model's large context window, robots can understand and navigate complex environments based on human instructions.

Watch this demo by Google DeepMind, and explore more by following their thread on X:

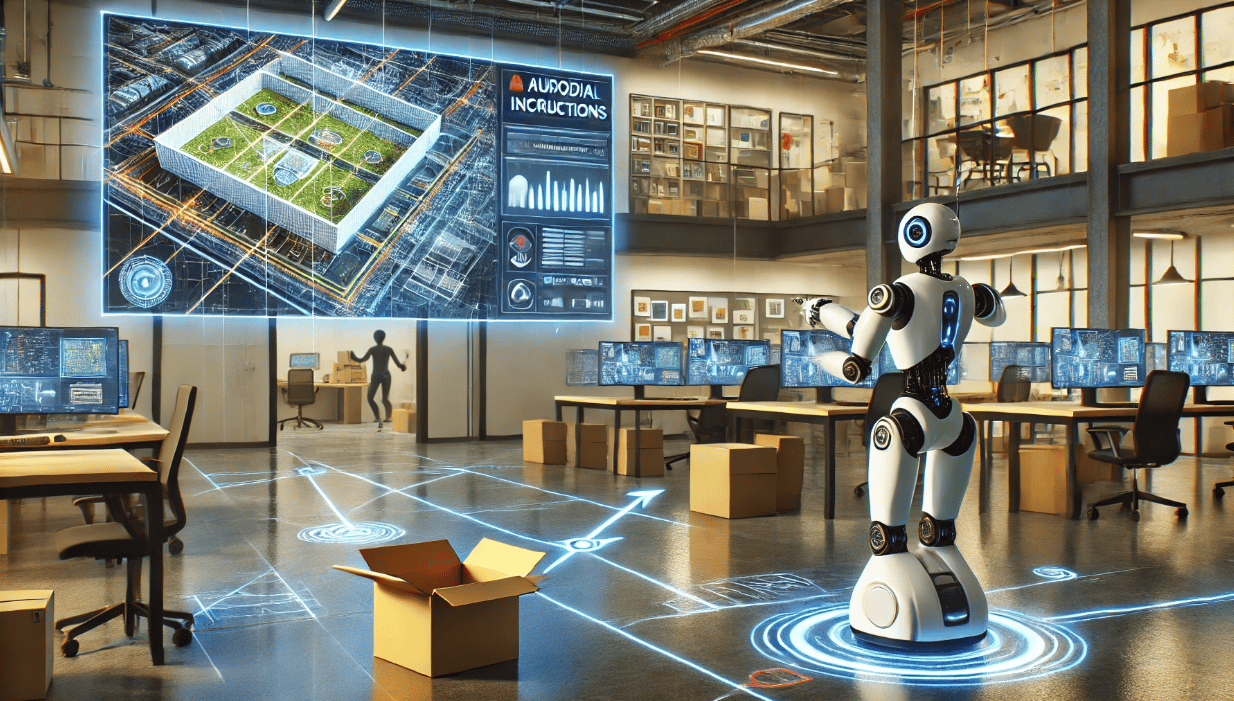

DeepMind’s system, called “Mobility VLA,” combines Gemini 1.5 Pro's 1-million-token context window with a map-like representation of a space to form a navigation framework. The process begins with the robot receiving a video tour of an environment, during which key locations are verbally highlighted. The robot then constructs a graph of the space from the video frames.

During testing, robots successfully responded to multimodal instructions, including map sketches, audio requests, and visual cues such as a box of toys. The system also supports natural language commands like "take me somewhere to draw things," enabling robots to lead users to suitable locations.

Combining multimodal capabilities with a long context window opens up new use cases. Google's 'Project Astra' demo hinted at voice assistants that can see, hear, and think. Embedding these functions in robots extends that vision to machines that can also move through the spaces they perceive.

How Gemini 1.5 Pro Enhances Robot Navigation

Traditional AI models often have limited context lengths, which makes it hard for them to recall an environment accurately. Gemini 1.5 Pro's 1-million-token context window, by contrast, lets robots use human instructions, video tours, and common-sense reasoning to navigate spaces effectively.

In practical applications, robots are taken on tours of specific areas in real-world settings, with key places highlighted, such as "Lewis’s desk" or "temporary desk area." They are then tasked with leading users to these locations. The system processes these inputs and builds a topological graph from the video frames, a simplified representation of the space. This graph captures the general connectivity of the surroundings, enabling the robot to find paths without a physical map.
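To make the idea of a topological graph concrete, here is a minimal Python sketch. It is not DeepMind's implementation. It assumes each tour frame comes with an estimated 2D position (the article does not say how frames are linked), connects frames that lie within a hypothetical `max_edge_length` of each other, and assumes the goal frame has already been chosen, for example by the language model. The function names are illustrative only.

```python
import heapq
import math


def build_topological_graph(positions, max_edge_length):
    """Link tour frames whose (assumed) positions are close together.

    positions: list of (x, y) tuples, one per tour frame (hypothetical input).
    Returns an adjacency dict: frame index -> list of (neighbor, distance).
    """
    graph = {i: [] for i in range(len(positions))}
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            distance = math.dist(positions[i], positions[j])
            if distance <= max_edge_length:
                graph[i].append((j, distance))
                graph[j].append((i, distance))
    return graph


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm over the frame graph.

    Returns the list of frame indices from start to goal,
    or None if the goal cannot be reached.
    """
    best_cost = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            path = [node]
            while node in previous:
                node = previous[node]
                path.append(node)
            return path[::-1]
        if cost > best_cost.get(node, math.inf):
            continue  # stale queue entry
        for neighbor, weight in graph[node]:
            new_cost = cost + weight
            if new_cost < best_cost.get(neighbor, math.inf):
                best_cost[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    return None


# Made-up frame positions from a short tour; frame 5 is far from the rest.
tour_positions = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (0.5, 5)]
tour_graph = build_topological_graph(tour_positions, max_edge_length=1.5)

route = shortest_path(tour_graph, start=0, goal=4)
unreachable = shortest_path(tour_graph, start=0, goal=5)
```

Here `route` is the sequence of frames the robot would pass through to reach the goal frame, and `unreachable` is `None` because frame 5 has no nearby neighbors. The graph stores only which frames connect to which, not a metric floor plan, which is why a path can be found without a physical map.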

Robots were also provided with multimodal instructions, including:

📝 Map sketches on a whiteboard

🗣️ Audio requests referencing places from the tour

🎲 Visual cues like a box of toys

These inputs allowed the robots to perform a variety of actions for different users. In tests, robots completed 57 types of tasks across a 9,000+ square-foot operating area, a step forward for human-robot interaction.

Read more about their research.

Looking ahead, users could simply record a tour of their environment with a smartphone, enabling their personal robot assistant to understand and navigate the space efficiently.