Image Source: ChatGPT-4o

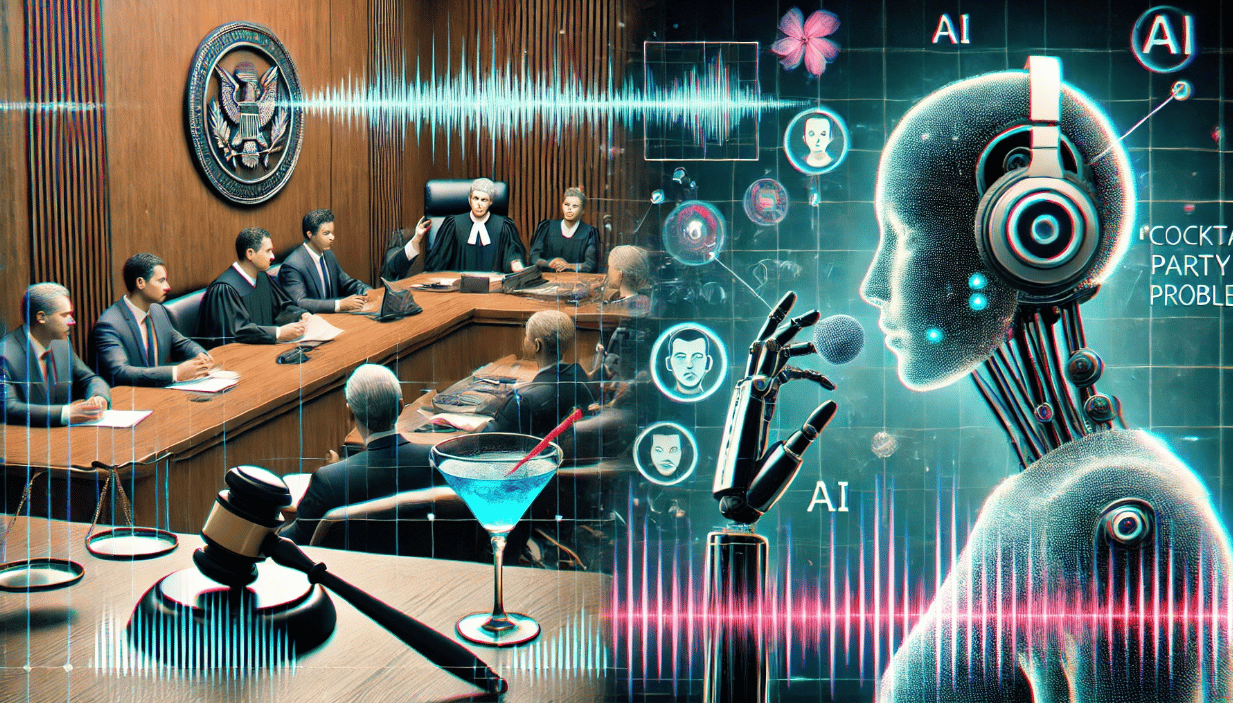

AI Solves 'Cocktail Party Problem,' Becomes Key Evidence in Court

The "cocktail party problem" refers to the difficulty of hearing one conversation in a crowded, noisy room—a task humans manage with ease but technology has struggled to replicate. This issue has significant implications in areas like courtroom audio evidence, where background noise can make recordings hard to use. However, AI is now providing a breakthrough solution.

The Origins of the AI Solution

Electrical engineer Keith McElveen, founder and Chief Technology Officer of Wave Sciences, first encountered the cocktail party problem while working on a U.S. government war crimes case. The evidence included recordings where multiple voices overlapped, making it hard to determine who was speaking. McElveen, already adept at removing non-speech noises like fans and air conditioners, found separating overlapping voices to be an even tougher challenge.

"Sounds bounce around a room, and it is mathematically horrible to solve," McElveen explained.

The key to overcoming this issue was using AI to pinpoint and screen out competing sounds based on their origin in the room. In a perfect, echo-free environment, one microphone per speaker would suffice, but real-world rooms introduce reflections that complicate the problem.
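Here is what "based on their origin in the room" can mean in practice. With two microphones, a sound's direction shows up as the small delay between its arrival at each one. The sketch below estimates that delay by cross-correlation and converts it into an angle. It assumes an idealized echo-free room, a distant source, a hypothetical 10 cm microphone spacing and a 16 kHz sample rate. The function names (`estimate_delay`, `lag_to_angle`) are illustrative only. This is a generic textbook localization technique, not Wave Sciences' method.

```python
import math
import random


def estimate_delay(mic_a, mic_b, max_lag):
    """Return the lag (in samples) that best aligns mic_b with mic_a.

    Plain time-domain cross-correlation: a positive lag means the sound
    reached mic_a first and arrived at mic_b `lag` samples later.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, sample in enumerate(mic_a):
            m = n + lag
            if 0 <= m < len(mic_b):
                score += sample * mic_b[m]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def lag_to_angle(lag, sample_rate, spacing, speed_of_sound=343.0):
    """Convert an inter-microphone delay into a direction of arrival in degrees.

    Assumes a far-field source and two microphones `spacing` metres apart.
    0 degrees is straight ahead of the pair; positive angles lean toward
    mic A's side (the side the sound reached first).
    """
    ratio = lag * speed_of_sound / (sample_rate * spacing)
    ratio = max(-1.0, min(1.0, ratio))  # keep the value inside asin's domain
    return math.degrees(math.asin(ratio))


# Hypothetical demo: a noise burst reaches mic B three samples after mic A.
rng = random.Random(0)
source = [rng.gauss(0.0, 1.0) for _ in range(400)]
mic_a = source
mic_b = [0.0] * 3 + source[:-3]

lag = estimate_delay(mic_a, mic_b, max_lag=10)
angle = lag_to_angle(lag, sample_rate=16_000, spacing=0.1)
print(lag, round(angle, 1))
```

In the demo, mic B hears the burst three samples late, so the estimate should come back as a 3-sample lag, roughly 40 degrees off center. Real rooms add reflections that produce competing correlation peaks, which is the difficulty McElveen describes.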

Developing AI-Driven Sound Separation

McElveen founded Wave Sciences in 2009 to develop technology capable of separating overlapping voices. Initially, the company employed a method known as array beamforming, which required numerous microphones to capture sound from multiple directions. However, commercial partners found this solution too costly and impractical.
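Array beamforming in its simplest, delay-and-sum form can be sketched as follows. Each channel is shifted by the delay at which the target's sound reaches that microphone, and then all channels are averaged. The target lines up and adds coherently, while sound from other directions stays misaligned and partly averages out. This is a generic textbook version with whole-sample delays and a made-up four-microphone geometry. The helper names (`delay_and_sum`, `delayed`, `power`) are hypothetical, and this is not the system Wave Sciences built.

```python
import random


def delay_and_sum(channels, delays):
    """Delay-and-sum beamformer with whole-sample steering delays.

    channels[i] is the recording from microphone i; delays[i] (>= 0) is how
    many samples later the target's sound reaches that microphone. Shifting
    each channel back by its delay lines the target up across microphones.
    """
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
        for n in range(length)
    ]


def delayed(signal, d, length):
    """Hypothetical helper: `signal` arriving d samples late, cut to `length`."""
    return ([0.0] * d + signal)[:length]


def power(signal):
    """Mean squared amplitude of a signal."""
    return sum(x * x for x in signal) / len(signal)


# Hypothetical 4-mic line array: the target reaches the mics with delays
# 0, 1, 2, 3 samples; an interferer arrives from the opposite side (3, 2, 1, 0).
rng = random.Random(1)
N = 2000
target = [rng.gauss(0.0, 1.0) for _ in range(N)]
noise = [rng.gauss(0.0, 1.0) for _ in range(N)]
steer = [0, 1, 2, 3]
noise_delays = [3, 2, 1, 0]

noise_only = [delayed(noise, e, N) for e in noise_delays]
mics = [
    [t + v for t, v in zip(delayed(target, d, N), noise_ch)]
    for d, noise_ch in zip(steer, noise_only)
]

beam = delay_and_sum(mics, steer)               # target + leftover interference
residual_noise = delay_and_sum(noise_only, steer)  # interference alone, same steering
print(power(noise_only[0]), power(residual_noise))
```

In this demo, the interferer's power after beamforming drops to roughly a quarter of its power at a single microphone, while the target passes through intact. Stronger rejection generally calls for more microphones, which fits the cost concerns commercial partners raised.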

After a decade of research, Wave Sciences filed a patent in September 2019 for an AI-based solution that analyzes how sound bounces around a room before reaching the microphone. The AI system tracks where each sound comes from and suppresses any noise that doesn't match the location of the target speaker.
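The idea of suppressing sound that does not match the target's location can be illustrated with a deliberately crude two-microphone sketch. It splits the recording into frames, estimates each frame's inter-microphone delay, and mutes frames whose delay does not match the target's. It ignores room reflections entirely, which is the part Wave Sciences' patented approach is described as modeling. It also gates whole frames rather than separating voices that overlap in time. Treat it as an illustration of location-based filtering, not as the company's algorithm. All names and parameters (`spatial_gate`, `best_lag`, `frame_len`, `tolerance`) are hypothetical.

```python
import random


def best_lag(frame_a, frame_b, max_lag):
    """Cross-correlation lag at which frame_b best matches frame_a."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(
            frame_a[n] * frame_b[n + lag]
            for n in range(len(frame_a))
            if 0 <= n + lag < len(frame_b)
        )
        if score > best_score:
            best, best_score = lag, score
    return best


def spatial_gate(mic_a, mic_b, target_lag, frame_len=256, max_lag=8, tolerance=1):
    """Keep only frames whose estimated direction matches the target.

    Frames whose inter-mic delay is within `tolerance` samples of
    `target_lag` are passed through from mic_a; all others are muted.
    """
    out = []
    for start in range(0, len(mic_a), frame_len):
        frame_a = mic_a[start:start + frame_len]
        frame_b = mic_b[start:start + frame_len]
        lag = best_lag(frame_a, frame_b, max_lag)
        if abs(lag - target_lag) <= tolerance:
            out.extend(frame_a)
        else:
            out.extend([0.0] * len(frame_a))
    return out


def late(signal, d):
    """Hypothetical helper: delay `signal` by d samples, keeping its length."""
    return [0.0] * d + signal[:len(signal) - d]


# Hypothetical recording: the target talks first and reaches mic B 2 samples
# after mic A; then an interferer on the other side reaches mic A 3 samples
# after mic B.
rng = random.Random(2)
N = 1024
talker = [rng.gauss(0.0, 1.0) if n < 512 else 0.0 for n in range(N)]
other = [rng.gauss(0.0, 1.0) if n >= 512 else 0.0 for n in range(N)]

mic_a = [t + v for t, v in zip(talker, late(other, 3))]
mic_b = [t + v for t, v in zip(late(talker, 2), other)]
cleaned = spatial_gate(mic_a, mic_b, target_lag=2)
```

In the demo, the first half of the recording, where the target speaks, passes through unchanged. The second half, where the interferer speaks from the opposite side, is muted. Practical separation systems typically make this kind of decision per time-frequency bin rather than per frame. That finer granularity is what lets them pull apart voices that talk at the same time.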

AI in Court: A Breakthrough in Audio Forensics

Wave Sciences’ AI solution made its forensic debut in a U.S. murder case involving two hitmen, where the audio it recovered proved central to the convictions. The FBI needed to prove the hitmen were hired by a family embroiled in a custody dispute. While phone records were relatively easy to obtain, recordings of the in-person meetings, held in noisy restaurants, were far harder to use. With the help of Wave Sciences’ AI algorithm, that previously unusable audio became pivotal evidence in court.

Since then, the technology has undergone extensive testing by government labs, including those in the UK, and is now being marketed to the U.S. military for analyzing sonar signals. It may also have applications in hostage negotiations and suicide prevention, where both sides of a conversation need to be clearly heard.

Expanding Applications Beyond Forensics

Late last year, Wave Sciences released a software application using its AI algorithm for government labs to conduct audio forensics and acoustic analysis. The company also plans to introduce tailored versions for voice interfaces in cars, smart speakers, augmented reality, and hearing aids. AI’s ability to separate voices from noise could revolutionize how we interact with technology in noisy environments.

For example, your car or smart speaker could accurately interpret your voice, even in a loud setting.

AI in Audio Forensics: A Growing Field

AI is increasingly being used in forensics. According to forensic educator Terri Armenta, machine learning models analyze voice patterns to determine speaker identities, a valuable tool in criminal investigations. Additionally, AI can detect manipulations in audio recordings, ensuring the integrity of evidence presented in court.

The Future of AI-Driven Audio Analysis

Other companies are also exploring the potential of AI in audio analysis. Bosch, for example, developed SoundSee, which uses audio signal processing algorithms to predict motor malfunctions by analyzing the sound of machines.

As for Wave Sciences’ algorithm, tests have shown that even with just two microphones, the technology performs as well as human hearing. McElveen believes that their AI solution may even mimic how the human brain processes sound.

“We suspect that the human brain may be using the same math,” says McElveen. “In solving the cocktail party problem, we may have stumbled upon what’s really happening in the brain.”